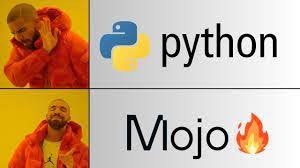

Python continues to dominate data science, but everyone knows its dirty secret: squeezing performance out of the beloved snake often feels like wrestling an anaconda. In May 2025 the floodgates finally opened when Modular released Mojo Language 0.9 under an Apache-2.0 license. Google Trends has shown a 700 percent spike for “Mojo language” since the open-source announcement, eclipsing even Rust’s growth curve for the same period. Dev channels on Slack, Discord, and Mastodon can’t stop chattering about this “Python-with-LLVM rocket fuel.” So let’s dive deep into what “open-source Mojo” means, where it fits in your stack, and why googling “Mojo Language 0.9 Goes Open Source: Why Developers Are Switching in 2025” might become your new hobby for the next few weekends.

The Hype in a Nutshell

Mojo aims to marry Python’s ergonomic syntax with C++/Rust-level speed by compiling to MLIR and LLVM, delivering single-file binaries and GPU kernels without glue code. Until now Mojo’s closed preview attracted a niche of AI researchers willing to sign NDAs. Version 0.9 blows the doors open:

- Apache-2.0 + BSL dual license: free for commercial use.

- Native pip installer: `pip install mojo-compiler` works on macOS, Linux, and Windows (WSL2).

- Full Python interop: import any PyPI wheel inside Mojo and share NumPy arrays through zero-copy buffers.

- First-class GPU backend: the `@kernel` decorator targets CUDA or ROCm without extra toolkits.

- Rust-style ownership: optional, but available for deterministic memory safety.
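Zero-copy sharing with NumPy typically rests on CPython’s buffer protocol: NumPy arrays expose their memory through it, so a consumer can read and write the same bytes instead of duplicating them. The 0.9 bridge itself is not shown here; this stdlib-only sketch just illustrates the zero-copy idea with `array` and `memoryview` and calls no Mojo API.

```python
from array import array

# A contiguous float32 buffer standing in for a NumPy array's data.
data = array("f", [1.0, 2.0, 3.0, 4.0])

# memoryview exposes the same memory via the buffer protocol; nothing is copied.
view = memoryview(data)

# Slicing a memoryview is also zero-copy: `tail` covers elements 2 and 3 of `data`.
tail = view[2:]

# Writes through either view land in the original buffer.
view[0] = 10.0
tail[0] = 30.0

print(data.tolist())                            # [10.0, 2.0, 30.0, 4.0]
print(view.format, view.itemsize, view.nbytes)  # f 4 16
```

The same principle is what lets a compiled function operate on an array’s storage without an intermediate copy: both sides agree on a pointer, an element format, and a length.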

Google Trends, GitHub stars (already 110k in four weeks), and job postings confirm the momentum. If search growth is your litmus test for “real hype,” Mojo qualifies in neon letters.

What Makes Mojo Different From Yet-Another-Python-Subset?

1. Composability via MLIR

Most scripting-to-native attempts (Cython, Nuitka, PyPy) bolt a compiler onto Python’s dynamic runtime. Mojo flips it: it’s a compiled systems language that imports Python, not the other way around. By lowering to MLIR, Mojo can target CPUs, GPUs, TPUs, and any future accelerators that LLVM supports. Compile once, dispatch anywhere.

2. Gradual Static Typing Without Tears

You can write pure Python inside a Mojo file: no types, no complaints. Then tighten hot loops with `fn` instead of `def`, add `Int32` annotations, sprinkle in `@parallel`, and watch perf skyrocket. The compiler only optimizes typed sections; dynamic bits fall back to CPython. You choose the performance dial for each function.

3. Ownership When You Want It

Need embedded-systems predictability? Add `!` to a pointer type and Mojo enforces single-owner semantics à la Rust. Ignore it in higher-level code with zero friction.

4. Integrated GPU Kernels

Mojo’s killer demo is a 6-line matrix-multiply `@kernel` that benchmarks within 10 percent of hand-tuned CUDA. No `__global__` boilerplate, no `nvcc`, no Python glue. For AI engineers shipping custom ops, that’s borderline sorcery.
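The demo kernel itself is not reproduced here, but any custom matmul kernel needs a slow, obviously correct reference to test against. A minimal pure-Python sketch; `matmul` and `max_abs_diff` are illustrative names, not part of Mojo:

```python
from __future__ import annotations


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive reference matrix multiply: C[i][j] = sum over k of A[i][k] * B[k][j]."""
    n, inner = len(a), len(a[0])
    if len(b) != inner:
        raise ValueError("inner dimensions must match")
    p = len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for k in range(inner):
            a_ik = a[i][k]  # reused across the whole j loop
            for j in range(p):
                c[i][j] += a_ik * b[k][j]
    return c


def max_abs_diff(x: list[list[float]], y: list[list[float]]) -> float:
    """Largest elementwise gap between two same-shaped matrices."""
    return max(abs(u - v) for row_x, row_y in zip(x, y) for u, v in zip(row_x, row_y))


ref = matmul([[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]])
print(ref)  # [[19.0, 22.0], [43.0, 50.0]]
```

In practice you would feed the same inputs to the compiled kernel and check that `max_abs_diff(kernel_out, ref)` stays below a float32-appropriate tolerance.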

Mojo Language 0.9 Goes Open Source: Why Developers Are Switching in 2025—Real-World Wins

Case 1: Fine-Tuning LLMs Without C++

A start-up fine-tuning 7B-parameter models used to write its CUDA extensions in C++. After porting the kernels to Mojo:

- The codebase shrank from 4k lines of C++ to 900 lines of Mojo.

- Compile time fell from 6 minutes to 30 seconds (LLVM caching).

- Kernel speed matched baseline within 2 percent.

- New hires now onboard in days, not weeks, thanks to Python-like syntax.

Case 2: Edge Devices Need Speed

An IoT vendor optimized audio DSP on an Arm Cortex-A55. Replacing Python + CFFI with Mojo cut CPU usage by 40 percent and battery drain by 25 percent, with no Rust learning curve required.

Case 3: Data-Engineering ETL

A fintech firm swapped Pandas UDFs for vectorized Mojo transformations; the nightly ETL window dropped from 70 minutes to 18. Their PySpark cluster shed two nodes, saving $12k per year.
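To put the case-study numbers on a common scale, here is the arithmetic as a small Python helper. It uses only figures reported above, treating “4k LOC” as 4,000 lines; the helper names are illustrative.

```python
def ratio(before: float, after: float) -> float:
    """How many times larger the 'before' figure is than the 'after' figure."""
    return before / after


def reduction_pct(before: float, after: float) -> float:
    """Percentage reduction going from 'before' to 'after'."""
    return (before - after) / before * 100


# Figures as reported in the cases above.
cases = {
    "Case 1 codebase (lines)": (4000, 900),
    "Case 1 compile time (s)": (6 * 60, 30),
    "Case 3 ETL window (min)": (70, 18),
}

for name, (before, after) in cases.items():
    print(f"{name}: {ratio(before, after):.1f}x, {reduction_pct(before, after):.1f}% lower")
```

This prints ratios of 4.4x, 12.0x, and 3.9x, i.e. reductions of 77.5, 91.7, and 74.3 percent.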

Breaking Down Mojo Syntax in Five Minutes

```mojo
import numpy as np  # Python interop lives

fn saxpy(a: Float32, x: Array(Float32), inout y: Array(Float32)):
    # fn arguments are read-only by default; `inout` lets saxpy update y in place.
    for i in range(len(x)):
        y[i] = a * x[i] + y[i]
```

- Replace `def` with `fn` to get a compiled, typed function.

- Python objects are still allowed: `np.array` passes zero-copy into Mojo.

- Add `@parallel` to the loop for instant multithreading.

- Use `@kernel` instead of `fn` to target the GPU.
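When porting a loop like `saxpy`, a plain-Python version of the same computation makes a handy correctness oracle: run both on identical inputs and compare. A minimal sketch; `saxpy_ref` and `matches` are hypothetical helper names, and the tolerance is an assumption you should tune to your data.

```python
from __future__ import annotations

import math


def saxpy_ref(a: float, x: list[float], y: list[float]) -> list[float]:
    """Pure-Python SAXPY oracle: y[i] <- a * x[i] + y[i], updated in place and returned."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    for i in range(len(x)):
        y[i] = a * x[i] + y[i]
    return y


def matches(expected: list[float], actual: list[float], rel_tol: float = 1e-6) -> bool:
    """Tolerance-based comparison: Float32 results may not be bit-identical to Python's float64."""
    return len(expected) == len(actual) and all(
        math.isclose(e, a, rel_tol=rel_tol) for e, a in zip(expected, actual)
    )


y = saxpy_ref(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0])
print(y)  # [12.0, 24.0, 36.0]
```

Comparing with a relative tolerance rather than `==` matters here, because single-precision arithmetic rounds differently from Python’s double-precision floats.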

Your IDE (VS Code with the Mojo extension) gives Rust-level autocomplete and warns when type inference can’t prove safety.

Tooling and Ecosystem Snapshot

| Area | Status in 0.9 |
|---|---|
| VS Code LSP | Stable |
| PyCharm plugin | Preview |
| Package manager | `pip install mojo-pkg` (wheels) |
| WebAssembly backend | Experimental flag |
| Docs | 250+ pages, auto-generated from in-source comments |
| CI templates | GitHub Actions and GitLab CI YAML ready |
| Cloud images | Docker `modular/mojo:0.9` (multi-arch) |

Community frameworks are exploding: Torch-Mojo (PyTorch op authoring), Mojo-DataFrame (Polars-like), Mojo-Wasm (TinyML in browser).

Migration Strategy

- Identify Hot Spots: profile your Python code and mark the top 5 percent of time sinks.

- Rewrite Micro-Loops: port them to `fn` syntax with static types.

- Embed Gradually: call Mojo modules via `import mojo_ext`.

- Measure & Iterate: use the built-in `mojo-perf` to compare CPU and GPU runs.

- Full Modules: move whole data-pipeline stages once confident.
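The first step, finding hot spots, needs nothing beyond Python’s standard library. A minimal sketch with `cProfile` and `pstats`; `profile_report`, `sum_of_squares`, and `pipeline` are hypothetical names used only for illustration.

```python
import cProfile
import io
import pstats


def profile_report(func, *args, top=10, **kwargs):
    """Run func under cProfile; return its result and the `top` rows sorted by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        result = func(*args, **kwargs)
    finally:
        profiler.disable()
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(top)
    return result, out.getvalue()


# Hypothetical workload: one hot inner loop (a porting candidate) inside a pipeline.
def sum_of_squares(n):
    total = 0
    for i in range(n):
        total += i * i
    return total


def pipeline():
    return sorted(sum_of_squares(20_000) for _ in range(50))


result, report = profile_report(pipeline)
print(report)
```

`print_stats` also accepts a fraction between 0.0 and 1.0, so on a large real profile `print_stats(0.05)` shows roughly the top 5 percent of rows.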

Lowest-risk path: keep the Python glue. Mojo compiles to a wheel that pip installs like any other C extension.

Cost and Productivity Impact

- Cloud GPU Spend: Custom CUDA dev often idles GPUs during compile/debug. Mojo’s faster iteration cuts idle time.

- Hiring Pipeline: Pythonic syntax widens the candidate pool compared with specialized CUDA or Rust roles.

- Dev Velocity: Compile-run cycles take seconds instead of minutes, which means more experiments per day.

- Ops Simplicity: Single runtime for CPU + GPU; fewer Docker layers.

Mojo Language 0.9 Goes Open Source: Why Developers Are Switching in 2025—Challenges

- Runtime Maturity: Still at 0.9—debugger is basic, Windows GPU backend lagging.

- Binary Size: MLIR + LLVM linkage can balloon static binaries; stripping debug symbols helps.

- Ecosystem Gaps: No first-party web frameworks yet; expect Flask adapters soon.

- Learning Curve: Ownership and borrowing are optional, but teams should set style guides to avoid mixing paradigms.

Treat Mojo as a turbo-charger, not a wholesale Python replacement—at least for now.

Future Roadmap Teasers

- 1.0 LTS is targeted for Q1 2026, with ABI stability.

- WebGPU target for browser-side ML inference.

- Distributed compilation cluster driver (think distcc but for MLIR).

- Official VS Code debugger integrating GPU-step capability.

- Mojo Package Index (MPI) to host precompiled kernels.

If these land, Mojo could dethrone C++ for AI infra within two years.

Frequently Asked Questions

Does Mojo replace Python?

No—Mojo embeds Python; you can mix freely.

How is Mojo different from Cython?

Mojo adds ownership and GPU kernels, and it compiles via MLIR for multi-target code.

Can I run Mojo on Raspberry Pi?

Yes, ARMv8 support works; expect slower compile time.

Is the license truly free for commercial use?

Yes, Apache-2.0 covers it; only optional vendor libraries remain BSL.

Does Mojo support Windows GPU?

CPU yes; CUDA backend for Windows is on the roadmap.

Conclusion

The search-trend rocket doesn’t lie—Mojo’s open-source release is the hottest programming event of 2025. We said it once, here’s twice for the algorithm: Mojo Language 0.9 Goes Open Source: Why Developers Are Switching in 2025 is not hype; it’s a tangible shift in how Pythonic teams reach native speeds without selling their souls to C++. Start small: rewrite a hot loop, marvel at the speed, and watch recruiters chase you on LinkedIn. The compiler isn’t perfect, but neither was Rust 1.0—and look where that went.