Moving from a monolith to microservices can dramatically improve agility and scalability, but it also introduces hidden complexities, from keeping data consistent across many services to preventing a sprawl of endpoints that hampers maintainability. Below, we examine common pitfalls devs encounter when adopting microservices, paired with real-world solutions and best practices to keep your architecture manageable.

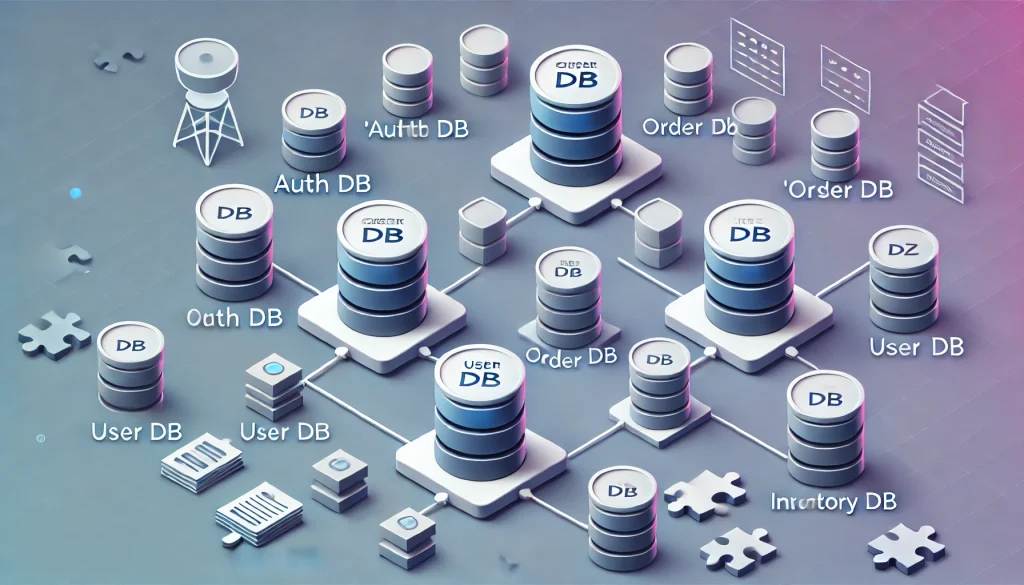

1. Data Consistency Across Services

The Pitfall

- Distributed Data: Each microservice often has its own database or data store, risking duplication or inconsistency.

- Eventual Consistency: Real-time synchronous updates are challenging, leading to potential data drift.

- Complex Transactions: Multi-service transactions can’t rely on a single ACID DB transaction, requiring sagas or advanced orchestration.

Mitigation Strategies

- Define Clear Boundaries: Identify subdomains or contexts so each service “owns” its data. Overlapping data remains read-only or must be updated via events.

- Event-Driven Patterns: Use message brokers or event buses (Kafka, RabbitMQ) to broadcast changes. Recipients update local views eventually.

- Sagas: Manage distributed transactions by orchestrating a sequence of local transactions—if a step fails, the saga triggers compensating actions.
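To make the saga idea concrete, here is a minimal in-process sketch. It assumes an orchestrated saga in which each step pairs a local action with a compensating action. The step names (`reserve_stock`, `charge_card`, `create_shipment`) are hypothetical stand-ins for calls to separate services, and a real saga would also persist its progress so it can resume after a crash.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SagaStep:
    name: str
    action: Callable[[dict], None]      # local transaction in one service
    compensate: Callable[[dict], None]  # undoes the action if a later step fails


def run_saga(steps: List[SagaStep], ctx: dict) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.action(ctx)
            completed.append(step)
        except Exception as exc:
            ctx["failed_step"] = step.name
            ctx["error"] = str(exc)
            for done in reversed(completed):
                done.compensate(ctx)
            return False
    return True


# Hypothetical stand-ins for calls to three separate services.
def reserve_stock(ctx): ctx["log"].append("stock reserved")
def release_stock(ctx): ctx["log"].append("stock released")
def charge_card(ctx): ctx["log"].append("card charged")
def refund_card(ctx): ctx["log"].append("card refunded")
def create_shipment(ctx): raise RuntimeError("carrier unavailable")  # simulated failure
def cancel_shipment(ctx): ctx["log"].append("shipment cancelled")


ORDER_SAGA = [
    SagaStep("reserve_stock", reserve_stock, release_stock),
    SagaStep("charge_card", charge_card, refund_card),
    SagaStep("create_shipment", create_shipment, cancel_shipment),
]
```

Calling `run_saga(ORDER_SAGA, {"log": []})` returns `False` here because the third step fails. The two completed steps are then compensated in reverse order (refund first, then stock release), while the failed step itself is not compensated.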

Pro Tip: For simpler systems, a partial monolith approach (like a single database with carefully segregated schemas) can reduce overhead if your dev team is small or lacks event-driven expertise.

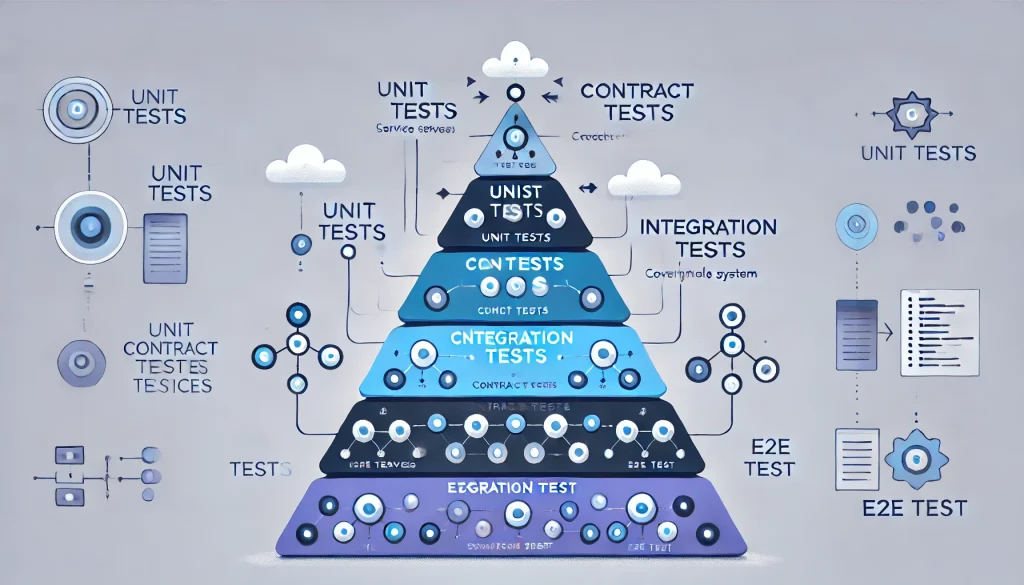

2. Testing Complexity in Microservices

The Pitfall

- More Services, More Tests: Integration tests multiply—services must be tested in isolation and collectively.

- Mocking & Stubs: Realistic service interaction is tricky; mocking everything can lead to coverage gaps.

- End-to-End (E2E): Setting up an environment with all services plus data stores can be time-consuming or resource-heavy.

Mitigation Strategies

- Layered Testing:

  - Unit Tests: Validate local logic.

  - Contract/Consumer-Driven Tests: Ensure service interfaces match expectations for client consumers.

  - Integration Tests: Confirm multiple services communicate properly, possibly using ephemeral containers.

  - E2E Tests: Final check with a fully deployed environment, often in staging or ephemeral CI pipelines.

- Test Containers: Tools like Testcontainers or Docker Compose help spin up ephemeral services for integration tests.

- Consumer-Driven Contracts: Pact or similar solutions define interactions so if a provider changes, consumer tests highlight breakages early.
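The core idea behind consumer-driven contracts can be illustrated without any tooling. The sketch below is a hand-rolled check, not Pact's API: the consumer declares which fields it reads and their expected types, and the provider's test suite verifies a sample response against that declaration. The `ORDER_CONTRACT` fields are hypothetical.

```python
from typing import Any, Dict, List

# Hypothetical contract published by a consumer: the fields it reads
# from the provider's order response and the type it expects for each.
ORDER_CONTRACT: Dict[str, type] = {
    "id": str,
    "status": str,
    "total_cents": int,
}


def contract_violations(response: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """Return human-readable violations; an empty list means compatible."""
    problems = []
    for field, expected in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected):
            actual = type(response[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems
```

Extra fields in the response are deliberately ignored, so a provider can add data without breaking consumers. Removing or retyping a field the consumer depends on shows up as a violation in the provider's CI run.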

Pro Tip: Start small—extract a single service, test from dev environment to staging. Once you’re confident in your layered approach, you can expand microservices one domain at a time.

3. Overhead & Complexity in Service Sprawl

The Pitfall

- Too Many Services: Breaking down a monolith too aggressively can lead to a labyrinth of endpoints, each with minimal logic.

- Network Overhead: Each API call is network-based, introducing potential latency, retries, or partial failures.

- Operational Burden: Logging, tracing, monitoring, and security for many small services is more complicated.

Mitigation Strategies

- Right-Sized Services: Start with a modest number of services matching domain boundaries (bounded contexts). Resist the urge for microservices that are “too micro.”

- API Gateways: Centralize cross-cutting concerns like authentication, rate limiting, or request transformation. Minimizes endpoint complexity.

- Observability Stack: Implement distributed tracing (Jaeger, Zipkin), log aggregation (ELK, Splunk), and metrics (Prometheus, Grafana) for cross-service visibility.
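Because every cross-service call can fail transiently, callers usually need some retry policy. The following is a minimal sketch of retry with exponential backoff and jitter. It assumes the transient failure surfaces as `ConnectionError`; the function name and defaults are illustrative only, and retries are only safe for idempotent operations.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on ConnectionError with exponential backoff plus jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            delay = base_delay * (2 ** attempt)
            # Jitter spreads retries out so many clients do not retry in lockstep.
            sleep(delay + random.uniform(0, delay))
    raise ValueError("attempts must be at least 1")
```

The `sleep` parameter exists so tests can inject a fake and avoid real waiting.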

Pro Tip: Some teams adopt a “modular monolith” approach, gradually peeling off critical modules. This ensures you don’t overshoot into a microservices “sprawl.”

4. Versioning & Compatibility

The Pitfall

- Breaking Changes: Each service evolves at its own pace, possibly introducing incompatible APIs for older consumers.

- Coordination: Rapid releases across dozens of services can complicate testing and destabilize shared environments.

- Backward Compatibility: Failing to provide old endpoints while new ones roll out might break older clients.

Mitigation Strategies

- API Versioning: Mark new endpoints with a versioned path or param (e.g., /v2/orders). Deprecate older versions gradually.

- Feature Toggles: For partial new logic or backward-compat behaviors, toggles can limit risk to a subset of traffic or only new consumers.

- Release Coordination: Some teams use a release train or trunk-based development with short-lived feature branches, so that only a few large changes are in flight across services at any one time.
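As a rough illustration of path-based versioning and percentage-based toggles, consider the sketch below. The handlers, response shapes, and flag name are hypothetical, and a real service would do the routing in its web framework or gateway rather than in a dictionary.

```python
import hashlib
from typing import Callable, Dict


def get_order_v1(order_id: str) -> dict:
    # Old shape: kept alive while v1 consumers migrate.
    return {"id": order_id, "total": "12.50"}


def get_order_v2(order_id: str) -> dict:
    # New shape: integer cents plus an explicit currency.
    return {"id": order_id, "total_cents": 1250, "currency": "USD"}


ROUTES: Dict[str, Callable[[str], dict]] = {
    "/v1/orders": get_order_v1,
    "/v2/orders": get_order_v2,
}


def handle(path: str) -> dict:
    """Dispatch '/vN/orders/<id>' to the handler registered for that version."""
    prefix, _, order_id = path.rpartition("/")
    handler = ROUTES.get(prefix)
    if handler is None:
        return {"error": "not found", "status": 404}
    return handler(order_id)


def toggle_enabled(flag: str, consumer_id: str, rollout_percent: int) -> bool:
    """Deterministically bucket a consumer into 0-99 and compare to the rollout."""
    digest = hashlib.sha256(f"{flag}:{consumer_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < rollout_percent
```

Hashing the flag together with the consumer ID keeps each consumer in the same bucket across requests, so raising `rollout_percent` only ever adds consumers to the new behavior and never flips existing ones back.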

5. Domain Boundaries & Bounded Contexts

The Pitfall

- Unclear Service Boundaries: A microservice that bleeds into multiple domains leads to tangled dependencies.

- Difficult Ownership: Overlapping responsibilities hamper both autonomy and data consistency.

- High Coupling: If services rely on each other’s data or logic, you reintroduce monolithic constraints.

Mitigation Strategies

- Domain-Driven Design (DDD): Identify subdomains, ensuring each microservice focuses on a single “bounded context.”

- Clear APIs: Services share minimal data—only the domain-level interactions needed.

- Context Mapping: Tools like event storming or domain mapping clarify how bounded contexts relate, preventing confusion.

Pro Tip: Regularly refactor domain boundaries if a service grows or merges domain responsibilities. This might be less painful than ignoring domain mismatch, which fosters tech debt.

6. Orchestration vs. Choreography

The Pitfall

- Over-Orchestration: A central orchestrator microservice might become a new monolith.

- Chaos: Fully choreographed systems (services react to events with no central control) can be too decentralized or unpredictable.

Mitigation Strategies

- Hybrid: Use an event bus or queue for certain flows, plus orchestrators for critical transaction sagas that need global insight.

- Domain Events: Publish domain events to reduce direct coupling. If one service must coordinate multiple sub-steps (like Payment, Invoice, Notification), an orchestrator or saga pattern might help.

- Monitoring: With decentralized event flows, ensure strong tracing so devs see the cross-service lifecycle of a single user action.
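The choreography side of this trade-off can be sketched with a tiny in-memory event bus. The `EventBus` class here is a stand-in for a real broker, and the event names (`PaymentCompleted`, `InvoiceIssued`) and service functions are hypothetical. Delivery is synchronous and in-process, which a real broker would not be.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class EventBus:
    """In-memory stand-in for a message broker."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # Copy the list so handlers may subscribe during delivery.
        for handler in list(self._handlers[event_type]):
            handler(payload)


bus = EventBus()
trace: List[str] = []  # records what happened, in order


def invoice_service(event: dict) -> None:
    trace.append(f"invoice issued for {event['order_id']}")
    bus.publish("InvoiceIssued", event)  # emits its own domain event


def notification_service(event: dict) -> None:
    trace.append(f"customer notified for {event['order_id']}")


# No service calls another directly; each only reacts to events.
bus.subscribe("PaymentCompleted", invoice_service)
bus.subscribe("InvoiceIssued", notification_service)
```

Publishing `PaymentCompleted` triggers the invoice step, which in turn triggers the notification step. Note that the overall flow is not written down in any single place, which is exactly why strong tracing matters in choreographed systems.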

7. Security & Access Control

The Pitfall

- Multiple Endpoints: Each service has an API. If not locked down, each is an attack surface.

- Inconsistent Policies: Hard to unify auth if each microservice implements it differently.

- Token Sprawl: Passing JWT or session tokens among microservices can get messy.

Mitigation Strategies

- API Gateway / Service Mesh: Enforce auth, SSL, rate limiting in a single gateway or sidecar.

- Central Auth: Possibly adopt OAuth2, OpenID Connect, or a dedicated auth server (Keycloak, Auth0) for consistent tokens.

- Zero Trust: Services communicate only via mTLS and identity-based policies. This limits how far an attacker can move if one internal service is compromised.

Pro Tip: Logging security events consistently across all services helps if a breach or suspicious pattern emerges.

8. Observability & Debugging

The Pitfall

- Scattered Logs: Logs are spread across multiple containers and services. Searching for user sessions can be tough.

- Partial Failures: Hard to see the “big picture” if a single microservice fails.

- Slow Tracing: Without distributed tracing, devs can’t quickly pinpoint where a request stalled among many microservices.

Mitigation Strategies

- Central Logging: Tools like ELK Stack (Elasticsearch, Logstash, Kibana) or Splunk unify logs.

- Distributed Tracing: Zipkin, Jaeger, or OpenTelemetry to map request flow across services.

- Service-Level Dashboards: Use Prometheus or other metrics to track service health, throughput, error rates. Investigate anomalies early.
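A lightweight first step toward traceable logs is a correlation ID that every service reuses and forwards. The sketch below uses only the Python standard library. The header name `X-Correlation-ID` is a common convention assumed here rather than a standard, and a full tracing setup such as OpenTelemetry would replace this hand-rolled propagation.

```python
import contextvars
import logging
import uuid
from typing import Dict

correlation_id = contextvars.ContextVar("correlation_id", default="-")
HEADER = "X-Correlation-ID"  # assumption: header name is a team convention


class CorrelationFilter(logging.Filter):
    """Attach the current correlation ID to every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


def configure_logger(name: str) -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(
        logging.Formatter("%(name)s cid=%(correlation_id)s %(message)s")
    )
    handler.addFilter(CorrelationFilter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


def begin_request(headers: Dict[str, str]) -> str:
    """Reuse the caller's ID if present; otherwise start a new one."""
    cid = headers.get(HEADER) or uuid.uuid4().hex
    correlation_id.set(cid)
    return cid


def outgoing_headers() -> Dict[str, str]:
    """Headers to attach to any downstream call made during this request."""
    return {HEADER: correlation_id.get()}
```

With every service logging the same `cid` value, searching the central log store for one ID returns the full cross-service path of a single user action.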

9. Overengineering or “Microservices for Everything”

The Pitfall

- Team Overhead: Maintaining many tiny services without real domain reasons can hamper velocity.

- High Infrastructure Costs: Additional containers, orchestrations, service meshes can balloon resource usage and cloud bills.

- Simpler Solutions: Often a modular monolith or fewer, well-defined services is enough.

Mitigation Strategies

- Right-Size: Start with a smaller set of domain-based services. Don’t break everything at once.

- Use a Hybrid Approach: Keep a “modular monolith” for stable modules, microservices only for domains needing distinct scaling or autonomous deployment.

- Regular Reevaluation: Each quarter, check whether any service is overkill and can be merged, or whether a monolithic chunk should be split further.

10. Maintaining a Culture of Continuous Improvement

The Pitfall

- Rigid Architecture: Teams fail to adapt microservices architecture as domain changes or business evolves.

- Stagnation: Over time, new features get shoehorned into existing services, leading to “distributed monolith” anti-patterns.

- Poor Knowledge Sharing: If one expert leaves, others might not grasp certain microservices, leading to confusion or duplication.

Mitigation Strategies

- Regular Domain Review: Evaluate if boundaries still align with real business subdomains.

- Document: Keep an up-to-date architecture map. Encourage “docs as code” using PlantUML or the like for quick updates.

- Cross-Team Collaboration: Promote knowledge sharing with pair programming or architecture brown-bags, especially before major service expansions.

Conclusion

Shifting from a monolith to microservices is rewarding, but complex. Data consistency requires event-driven or saga-based approaches, testing gets layered to handle partial and full integration, and you risk service sprawl or complicated versioning if not carefully planned. By defining domain boundaries, adopting event-driven patterns, and investing in observability tools, you can mitigate the typical pitfalls. Keep the architecture flexible—some domains might stay within a modular monolith, while others flourish as microservices. Ultimately, success hinges on cultural readiness for continuous improvement and stable DevOps processes that unify your distributed system.